Why PyTorch is an amazing place to work... and Why I'm Joining Thinking Machines

In which I try to convince you to join either PyTorch or Thinking Machines!

Note: This is a revised (and extended) version of the note I posted internally when I decided to leave PyTorch. I’ve gotten a lot of questions, and I hope that this post will both 1. answer those questions and 2. convince you that working at PyTorch or Thinking Machines would be a great idea :)

Well, there’s no easy way to say this. After about 4 years of working at PyTorch, I’ve decided to leave PyTorch to be a founding engineer at Thinking Machines. In that sentence, I would emphasize “be a founding engineer at Thinking Machines” far more than “leave PyTorch” - I have enjoyed (and continue to enjoy) working on PyTorch, and would have gladly worked here another 4 years.

At several points over the last few years, I’ve talked to folks who have expressed surprise that I’m still at PyTorch. Not to brag, but it certainly wasn’t for lack of opportunity - I’ve been offered roles at OpenAI/Anthropic, I was recruited to be a founding engineer at {xAI, SSI, Adept, Inflection, etc.}, I’ve been offered roles at many other startups you likely know, etc. In hindsight, many of these opportunities would have led to much greater compensation, but I’ve never regretted staying at PyTorch.

Let’s talk about why I’ve enjoyed working at PyTorch for 4 years, and what compelled me to go to Thinking Machines.

Apologies in advance for this note: it’s a self-indulgent and personal post. But I only get to write this once!

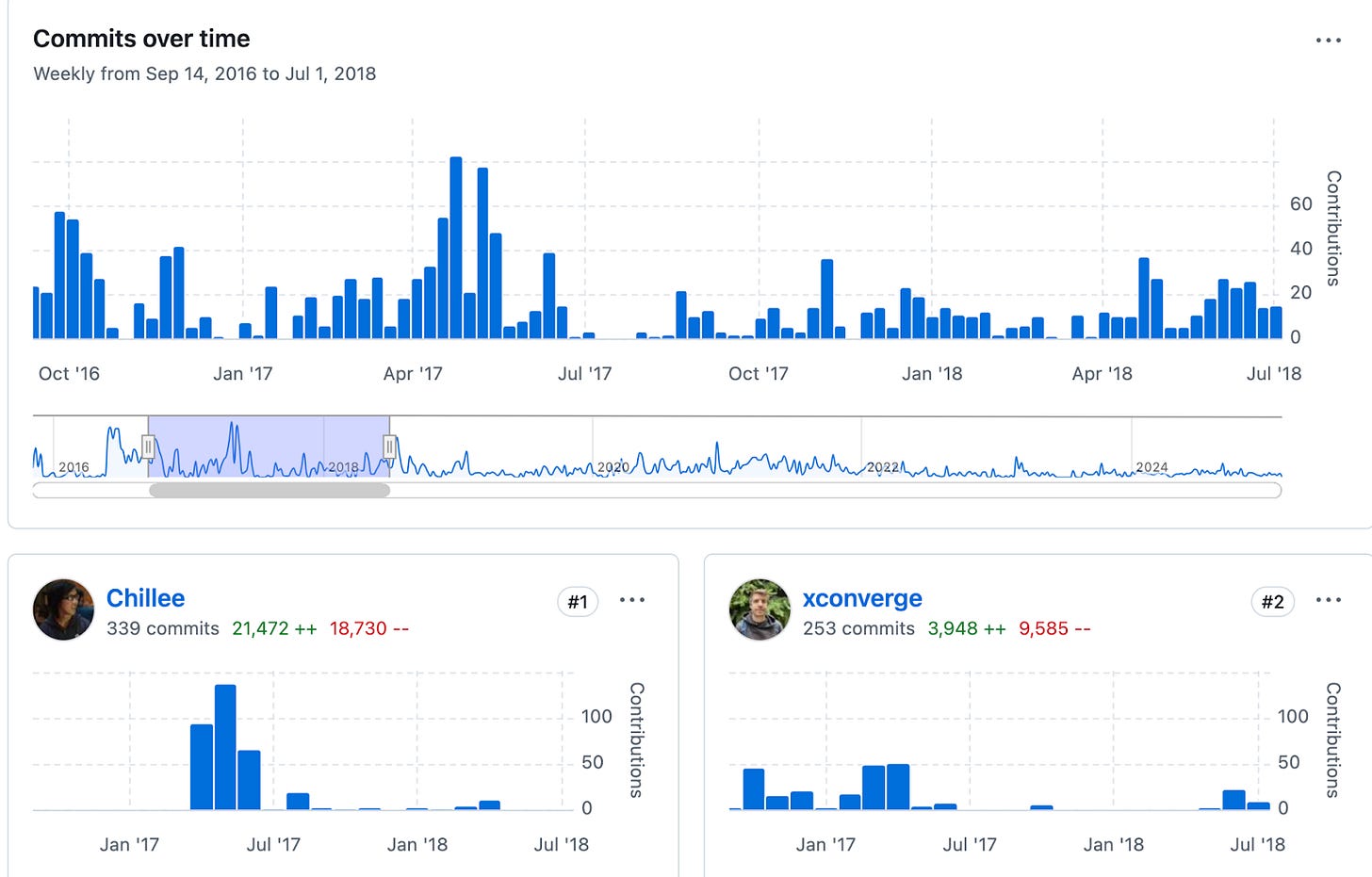

How I ended up at PyTorch

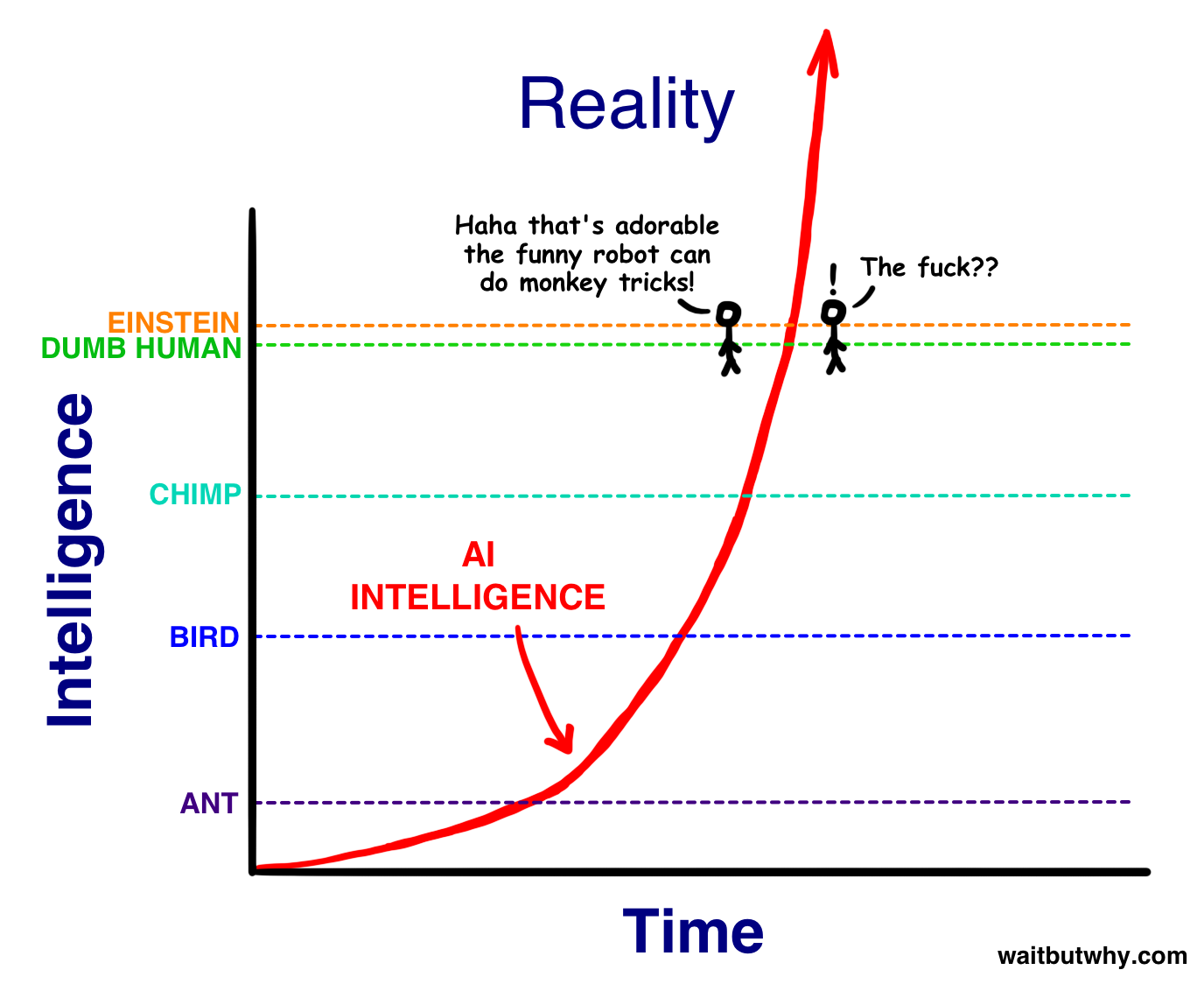

I think it’s fair to call me an AI “true believer”. Ever since I saw AlphaGo in high school and read the WaitButWhy AI post (not totally sure it holds up 10 years later), I was convinced that AI would be the most important technology of my lifetime. Correspondingly, from the time that I started college in 2016, most of what I did was AI-related. I took ML classes, I founded an undergraduate ML research club, I published papers, I even met my girlfriend (now fiancee!) through doing ML research together!

However, there were several things that didn’t totally satisfy me about just doing ML research.

For one, even back then, although I was publishing papers, it wasn’t super clear to me whether any research I did was actually “meaningful”. In research, one demoralizing aspect is that, looking back, 99% of papers don’t end up on the “critical path” to what actually works. Cynically, any PhD student who spent their time on n-gram models wasted it - their papers and theses relegated to the dustbin of history. Although it’s true that even papers that aren’t on the critical path can still be useful and necessary (e.g. demonstrating limitations of existing approaches, setting up baselines for new approaches to surpass), this worry constantly nagged at me.

Second, I was never able to adapt well to the “running experiments” mode of ML research - my working style is somewhat irregular, with lots of thinking punctuated by lots of coding. On the other hand, I think tremendous discipline is required to be a great ML experimentalist - it’s a constant loop of “come up with hypothesis” => “run experiment” => “get result of last experiment back” => “come up with new hypothesis”, often pipelined several stages deep. In ML research, you have a physical constraint (GPUs), and to be a good researcher you must keep them well utilized with experiments.

Overall, I ended up gravitating much more to “systems”. Not only was it an area that I thought played to my strengths, I had always admired the impact of systems. Instead of delivering impact directly, you could improve the impact of thousands or millions of people by 5%!

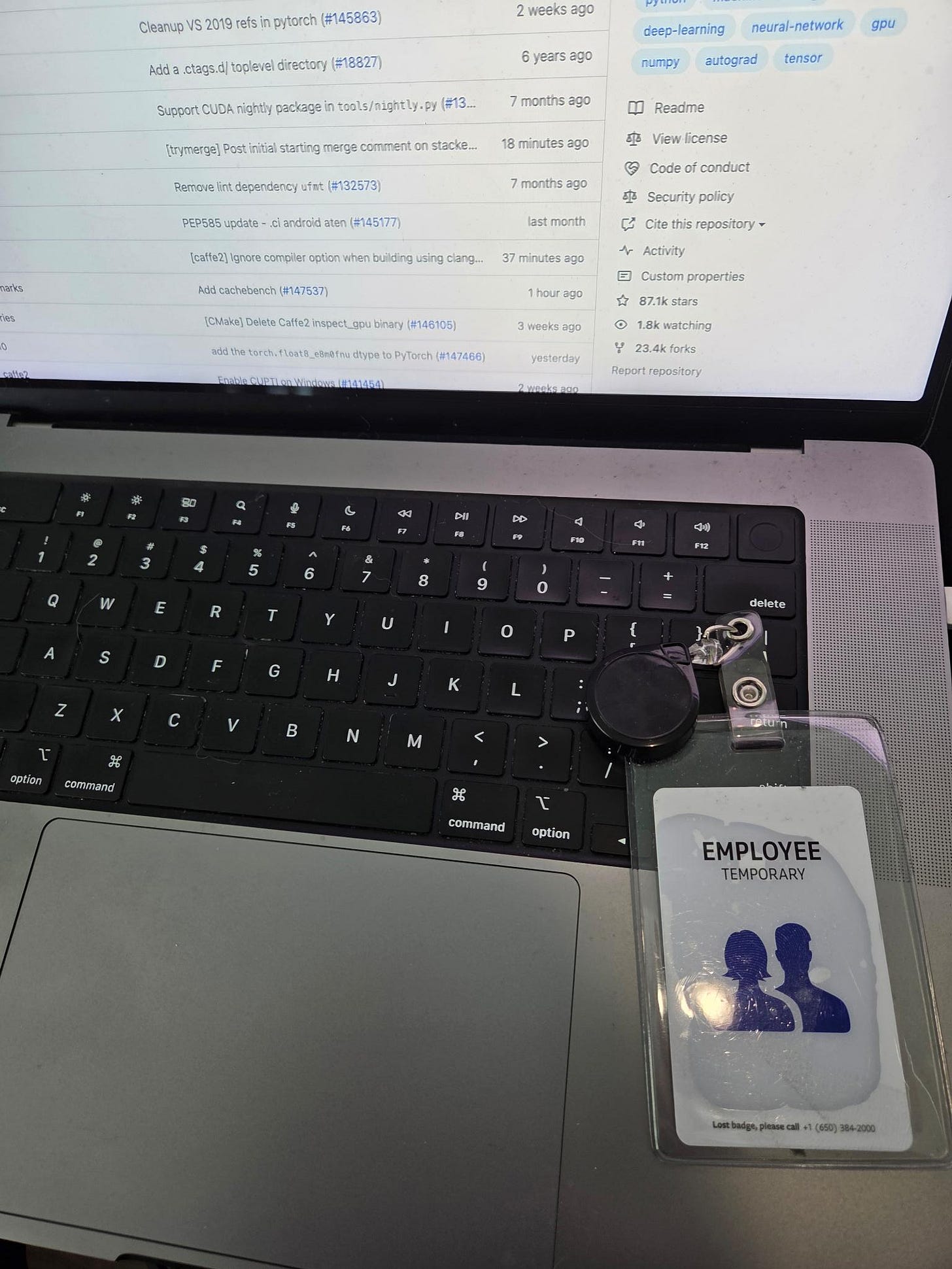

So, I ended up with my career plan - instead of directly working on advancing ML development, I would work on building infra to help others advance ML development. A bunch of other things happened in between, but that’s how I ended up on PyTorch.

Why PyTorch is an amazing place to work

PyTorch’s Impact on the Industry

As the field (and money!) has exploded over the last 10 years, I think it’s easy to lose track of just how much impact PyTorch has had. Perhaps the most obvious tracker of the money in the field is Nvidia’s stock price, primarily driven by growth in server-side GPU sales.

I think it’s reasonable to guess that at least 75% of these GPUs are running some kind of PyTorch code.[1] That’s insane. Nvidia has gained something like $3T of market cap, and PyTorch was crucial for much of it.

Moreover, across the broader ML community, PyTorch remains the lingua franca. 59% of research papers tracked by Papers With Code use PyTorch (with 29% not using any ML framework), the vast majority of models on Hugging Face (90%+?) are built on top of PyTorch, and the most popular inference servers (vLLM and SGLang) are also built on top of PyTorch.

Even at the leading AI labs, pretty much all of the companies using GPUs use PyTorch. OpenAI, Mistral, DeepSeek, and Meta primarily use PyTorch (and GPUs). Anthropic also primarily uses PyTorch for GPUs, and xAI (which uses JAX for training on GPUs) also uses PyTorch for inference (through SGLang)!

In high school, one of the things I feared most was that I would work on some project for 10 years and eventually realize that I’ve wasted my life improving something that nobody cared about. One of the greatest things about working on PyTorch is the certainty that I haven’t.

PyTorch’s Impact on Me

I’ve spent my entire career (thus far) on PyTorch, and so, outside of the overall impact of PyTorch, I want to talk about why I’ve enjoyed the day-to-day so much.

Mission Alignment

One of the greatest things about startups is “mission alignment”. Because so much of your comp is tied to equity upside, there’s no difference between “my coworker wildly succeeded” and “we all wildly succeeded”. On the other hand, at a large tech company, people’s compensation is primarily tied to their individual performance rating (and promos). So, if you start working on an approach, and somebody else comes up with a different approach that’s wildly successful (and supersedes yours), your performance rating is likely to suffer, and you probably won’t get promoted.

At PyTorch however, many of the people on the project are mission aligned - they do genuinely care about the overall success of PyTorch and its impact on the ML ecosystem. I certainly wouldn’t say it’s 100% of people on the team, but it’s enough (especially among more senior folk) to make it a much more pleasant experience.

A True Commitment to Open-Source

Soumith (and other folks in leadership) have done an exceptional job of cultivating a culture where OSS is valued at PyTorch. There are many other projects that happen to be OSS, but where you can often only get promoted and have impact by prioritizing internal projects. This isn’t true at PyTorch - I would say that I’ve spent my entire time here primarily focused on OSS impact, and I’ve been successful in terms of ratings and promotions. (Of course, there are other folks primarily focused on internal impact who have also been very successful.)

There are other aspects in which valuing OSS leads to a much healthier project.

Ungameable Impact

One phenomenon at large tech companies that I don’t really like is what I call “roadmap-driven adoption”. This is where two managers/directors/VPs get together, agree that X should be used (potentially killing some other project Y), and then lay out X’s adoption in the roadmaps of several teams.

While this certainly has its advantages (and in some cases is entirely necessary), I find that projects adopted this way are often … subpar. Moreover, it’s not uncommon for the success of these projects to be a facade - they continue as long as some VP is sponsoring the project, but eventually people get fed up with it, the VP loses a political fight, or the VP simply changes their mind. Basically, in roadmap-driven adoption, the most important thing is convincing some “key stakeholders” that your project should be adopted.

On the other hand, OSS impact is truly a free market. Open source users couldn’t care less if Mark Zuckerberg is throwing his entire support behind a project. OSS users only care that 1. you’re solving a problem they have, and 2. they like using your software.

Mike Schroepfer (former CTO at Meta) expressed a similar sentiment here. I can’t even imagine how hard it is to get “real” feedback as a CTO, when everybody you talk to knows that you can single-handedly decide their promotion or bonus. OSS is refreshingly ungameable.

Amazing Opportunities for Career Growth

Even from a purely selfish perspective, it’s hard to imagine a place with more opportunities for career growth. Folks can often get tunnel vision on internal promotions. But a much bigger driver of career growth is often “what do folks across the industry think of you”. Objectively speaking, I’ve gotten incredible offers to be a founding engineer at startups (SSI, xAI, Thinking Machines) as well as great opportunities for (fairly) high-profile talks (Jane Street, a couple of lectures at universities, etc.). Even among big tech companies, at one point a director offered me a 2.5-level jump to go work at Google.

Frankly, it’s embarrassing to write the above paragraph - there are definitely other folks on PyTorch who are just as good or better than me, but due to my focus on OSS and public presence (twitter/blogs/etc.), I’ve become fairly well known. But… this is somewhat of a “sell-post” on PyTorch, so I’ll allow it :)

I would say that just working on PyTorch alone is a great opportunity. Andy Jones (at Anthropic) once wrote an essay whose top line was “If you think you could write a substantial pull request for a major machine learning library, then major AI safety labs want to interview you today.” Obviously, if you work on PyTorch, you qualify for this.

But where PyTorch truly excels is the opportunity for engineers to have industry-wide impact (and to get recognized for it!). It’s very rare to work on software that most people in the most important field of our time use - I’d recommend taking advantage of it.

Last note - even for internal work, I find broad OSS impact is very helpful. Especially when it comes to cross-team collaboration, one of the most important currencies is “legitimacy”, and OSS impact is far more likely to confer legitimacy than internal impact. I’ve benefited significantly from this.

Interesting Technical Work

One fear of many engineers is that they won’t be able to solve interesting technical problems - there’s no shortage of those on PyTorch. There are projects that have implemented a Python bytecode interpreter JIT for machine learning (TorchDynamo), projects on reaching speed-of-light for matrix multiplications, projects where you regularly need to dive into PTX documentation (perhaps this one isn’t appealing hahaha), projects all about reasoning over symbolic shapes (sympy, z3, etc.), and so many more.

There’s also no shortage of problems to be solved 😛

Consider Working on PyTorch!

Perhaps a year ago I was talking with Adam Paszke about working on ML frameworks, and we agreed that, given how sweet the gig is, it was surprising that more people didn’t want to work on ML frameworks. This post is (partially) my attempt to remedy that.

If any of the above sounds appealing to you, consider working on the PyTorch Core team[2] (primarily under Gregory Chanan)! I would recommend emailing Soumith Chintala at soumith@meta.com. Although I will (sadly) no longer be on the team, there are many other amazing people to work with (and I plan to stay in regular contact with the team).

From my perspective, an ideal candidate would:

Have an inherent curiosity about the field of machine learning: Machine learning moves very quickly, and one characteristic I’ve found most valuable is a general sense of what’s going to be useful next. For me, the years I spent doing pure ML research (using PyTorch) were extremely helpful in understanding what should be built.

Be highly agentic: Although having ideas on what should be done is an important characteristic, it’s perhaps even more valuable to just “do it”. PyTorch, in many ways, is a very “bottom-up” org. Although there’s always roadmapping and such, the most impactful projects were never on the roadmap.

Why I’m Excited About Thinking Machines

Given that I just wrote way too much about why I loved working on PyTorch, why did I join Thinking Machines? Moreover, why was Thinking Machines the opportunity that convinced me?

A Group of People I Would Very Much Like to Work With

As everyone knows, a startup is nothing without its people. And Thinking Machines sure has some pretty good people!

You have the folks responsible for the “research preview” that kickstarted this whole situation (John Schulman, Barrett Zoph, Luke Metz), you have folks who led pretraining efforts at Meta, OpenAI, Character.AI, etc. (Sam Schoenholz, Naman Goyal, Myle Ott, Jacob Menick), you have folks who led multimodal efforts at OpenAI/Mistral (Alexander Kirillov, Rowan Zellers, Devendra Chaplot), you have extremely good infra people (Andrew Tulloch, Yinghai Lu, Ian O’Connell, etc.), and of course you have the former CTO (and brief CEO :^)) of the biggest AI company in the world (Mira Murati).[3]

However, perhaps even more than the strength of the team, I was impressed by its friendliness. Certainly, the fact that I had previously enjoyed working with several of these people helped. Of the 4 people whose departures from Meta I’ve been saddest about, 2 of them (Andrew Tulloch[4] and Yinghai Lu) are at Thinking Machines.[5]

An Amazing (and Asymmetrical) Opportunity

One unfair advantage of being a founding engineer at a startup (especially one that’s so clearly a “good” opportunity) is the asymmetrical opportunity cost.[6]

For example, if I join Thinking Machines as a founding engineer, and then in a year decide I was massively mistaken and go to another lab, my role there probably wouldn’t be all that different! I would be joining an established company, and the role would likely be fairly similar to what it would be if I joined today. Somewhat perversely, just like the prodigal son, it might even be beneficial to have left.

However, if I declined now but then joined Thinking Machines in a year, my role would be drastically different. Of course, my compensation would change, but more importantly, I would have far less legitimacy and influence. The culture and direction of a company are largely set by the founding team, and that’s an opportunity I wouldn’t have at OpenAI or Anthropic.

An Approach to Positive AI Outcomes that Resonated with Me

Perhaps most importantly, Thinking Machines’ approach to positive AI outcomes - research/product codesign and open science - resonated with me. As mentioned above, I’ve been convinced since high school that AI would be the most important technology of our lifetime. However, this is not the same thing as saying it’ll be the most beneficial.

Note: Don’t take the below statements as speaking on behalf of Thinking Machines - although I’ve talked to members of the team about these various topics, this is entirely “my stance” and “why does my stance lead to me joining Thinking Machines”. I also don’t have the space to give this topic a more in-depth treatment, so view it more as an “explanation of my beliefs” as opposed to a watertight argument.

Generally speaking, I would consider myself a techno-optimist. That is, I believe that humans’ lives have gotten drastically better over the last 1000 years, and that this has been largely driven by technological innovation. I’m thankful for the modern supermarket, I’m astounded by semiconductor manufacturing, and I’m especially grateful for air conditioning. In many ways, AI is the most techno-accelerationist technology the world has ever seen - a single technology that has the potential to solve every other technical challenge we face. Because of this, the potential positive impacts of AI are worth pursuing - I like Dario’s piece “Machines of Loving Grace” for a more in-depth look at what that might look like.

Of course, bad outcomes are possible, and due to the potential impact of AI, bad outcomes seem far worse than with other technologies.

In general, I’ve categorized bad AI outcomes as:

Misuse: Bad people use AI to do something bad.[7]

Misalignment: Good people use AI, but the AI itself ends up doing something bad.[8]

Societal Impacts: People are good, AI is good, but we end up in a bad outcome anyway.[9]

Although all 3 are reasonable concerns, I would say I’m most concerned with “Societal Impacts”.[10]

The primary reason for this is that society naturally has a strong “immune response” to Misalignment and Misuse. When it comes to potentially harmful technologies, society has a clear playbook - if something bad happens, increase the restrictions (e.g. regulate GPUs) or regulations (e.g. mandate more safety oversight).

Of course, AI is not a normal technology, but concretely speaking, I think there will be plenty of warning signs before truly catastrophic misuse or misalignment. Even if an AI bides its time before revealing its misalignment (e.g. deceptive misalignment or a treacherous turn), I find it unlikely that the first AI system to do so will succeed - it would need to be drastically more powerful than both humans and other AIs.

On the other hand, negative Societal Impacts seem much more straightforwardly plausible. Imagine a world where ChatGPT was never released, and instead a hypothetical AI lab, ClosedAI, releases AI Job Replacement Agent 3000 in 2030, instantly replacing 50% of all human jobs and turning ClosedAI into a quadrillion-dollar company.

Even in today’s world, the secrecy of the top AI labs definitely rubs me the wrong way (although I understand why it’s done) - I don’t know how much more vagueposting I can take. Moreover, the ideological and geographical concentration of AI knowledge doesn’t seem ideal - as AI expertise becomes more and more in-demand, the fact that the vast majority of AI secrets are contained in a 50 mile radius around San Francisco leads to both power-imbalance and a monoculture.

If we need to align AI to human values, should all of those humans live in San Francisco?

Why I’m Compelled by Thinking Machines’ Mission

Broadly speaking, there are 2 main aspects of Thinking Machines’ mission that were compelling to me.

A Focus on Product and Broad AI Diffusion

In my opinion, one of the most important aspects for broader societal stability is how smoothly society transitions to using AI systems. Just as important as the outcome is how people feel we arrived at that outcome.

For example, ChatGPT did not really blow many ML researchers’ minds - they’d seen GPT-3 and what GPT-3 prompting could do, so ChatGPT was just a convenience feature. However, ChatGPT absolutely blew the rest of society away. This was the first time broader society became aware of all the things a SOTA LLM could do, and society was shocked. Since then, however, ChatGPT has become much more normalized among broader society - folks are on a bit of a hedonic treadmill.

But there is much more that can be done. Even today, there is a vast gap between what a layperson encountering ChatGPT for the first time can do and what those who have deeply integrated AI into their workflows can do.

Moreover, I believe there is a lot of potential in building AI products that help people collaboratively, as opposed to fully autonomous AI agents. One cute way I thought about it was “Maximize the value of labor instead of capital”.

Open Science and Systems

As mentioned above, it doesn’t seem good for society for the knowledge of how these AI systems are built to be kept so secret. Not only does it create resentment towards these AI labs, it also makes it much more difficult for society to build on top of these AI systems! For example, DeepSeek’s recent releases (both their papers and code) have helped the broader community develop a much better understanding of what will be useful moving forward (e.g. online RL).

Personally, of course, this was a big part of my motivation for PyTorch. Good open-source systems help out the entire ecosystem, enabling many more people to participate in building AI systems.

I also want to note that although open science/systems is certainly a nice ideal, there are obviously also economic realities at play. In my opinion, this is where the focus on product is useful. Companies like Meta or Google don’t need to be very secretive about the actual techniques they use - more or less, most of their core systems/approaches are widely known by the community, and their edge comes from their products. On the other hand, if your only product is an API endpoint with tokens in and tokens out, your only edge is your model’s exact capabilities.

The culture and defaults of a company also matter a ton. There are many things at these AI labs that could be OSS’ed without affecting their competitive edge - they just don’t do it because the default is closed and they’d need to argue why it should be open.

For example, PyTorch is the opposite here. All of our code is open-source, our roadmaps are open, and some of our design meetings are open too. So if you don’t want something to be open, you must argue why it should be closed.

Based on Sam Altman’s comment here, he thinks that OpenAI should be open-sourcing more things. However, it’s “not [the] current highest priority”.

Overall Thoughts on Positive AI Outcomes

Overall, I think Thinking Machines’ mission of broad AI diffusion and collaborative open science is a compelling strategy to help address Societal Impacts. Of course, there are other essential approaches (like policy), but Thinking Machines’ mission personally resonates with me and is an area I believe I can contribute to.

Final Thoughts

The opportunity to join Thinking Machines as a founding engineer hit basically all of my checkboxes.

An extremely strong team, with folks I’ve personally enjoyed working with before and other folks I think I’ll enjoy working with.

The opportunity to be there from the beginning and have a say in the direction and culture of a very exciting company.

A mission (product-focus + open science) that was uniquely compelling to me in leading to better AI outcomes.

Finally, as a bit of a gut-instinct thing, the open science/systems aspect allows me to continue some of what I enjoy about my role at PyTorch - talking to people about AI systems and having broad impact with open-source code.

Almost none of my previous opportunities hit even 2 of these boxes, let alone all 4. One point I distinctly remember when considering this was: “If even this opportunity doesn’t compel me to leave PyTorch, I should/would probably work on PyTorch forever”.

Although it was a very difficult decision, I’m very excited to build some cool stuff at Thinking Machines!

Come work at Thinking Machines!

If any of the above sounds compelling to you (or if you want to work with me :P), please email me at horace@thinkingmachines.ai or DM me on twitter!

At the very least, I think it’ll be a lot of fun!

Acknowledgements

I’d like to thank everyone I talked to while making this decision, all of whom provided me a lot of insight. I’d also like to thank my coworkers at PyTorch for making my last 4 years so enjoyable.

[1] The rest would be a combination of A. other ML frameworks, B. workloads running raw CUDA (often the case in HPC), and C. pure C++ offerings like TensorRT.

[2] There are other fun teams under the broader PyTorch org, but the one I worked on is PyTorch Core (specifically PyTorch Core Compilers). While some aspects apply to other teams as well, I think the OSS presence is strongest on the PyTorch Core team.

[3] There are other folks at Thinking Machines who I hear are also extremely strong (e.g. Alex Gartrell was lead of Operating Systems at Meta and Sam Schleifer was one of the first employees at Character.AI) - this is mostly just the folks I’d heard of prior to joining.

[4] A funny story: in a weird way, Andrew Tulloch leaving Meta to go to OpenAI actually reassured me about staying at Meta. I thought Andrew Tulloch was one of the strongest engineers at Meta, so if he went to OpenAI and ended up being a run-of-the-mill engineer there, it would have really made me feel like Meta was a small pond. However, by all accounts, he was one of the best engineers at OpenAI too.

[5] For what it’s worth, one of the other ones (Natalia Gimelshein!) is back at PyTorch :)

[6] Of course, this is not the first time I’ve heard (or thought) about this argument. There are 3 main counterarguments for why this may not be a compelling argument:

Although the project (PyTorch) will almost certainly still be around in a year, the specific projects and directions I’m pushing for will not. Concretely, one of the things that made me most hesitant to leave PyTorch was that there were a couple of directions I was strongly pushing for whose success is more uncertain without me.

Although moving around may certainly lead to short-term career (and knowledge) gains, ownership and legitimacy can only be obtained through sticking with a project for a long period of time. Sometimes I think about how it would be fun to spend 6 months at each AI company to understand how they operate. However, this wouldn’t work out well in the long run - mercenaries may be valued but never respected.

Perhaps most sappily, it does feel a bit like a relationship breakup. I know people say that you should just treat a job like a job, but I can’t help it haha. I really like the people I’ve worked with.

Basically, for me, this argument was most compelling for why I should join Thinking Machines now rather than OpenAI/Anthropic/xAI.

[7] Misuse is probably the most straightforward bad outcome from AI - a bad person uses AI to do something bad. Just like guns enable a single human to cause far more harm than if they only had knives, a very capable AI system may enable a single human (or terrorist group) to do far more damage than they otherwise could.

Others also worry about authoritarian countries leveraging AI to make a super-surveillance state.

[8] Misalignment is when the folks using AI may have good intentions, but due to reward hacking/goodharting/instrumental convergence, the AI ends up achieving some other goal that might be bad. This is also often called “paperclipping”.

Although there are certainly versions of this that may seem extremely “sci-fi”, there are plenty of more prosaic examples. For example, humans certainly didn’t intend to pollute the environment, but nevertheless, it occurred as a side-effect of other things we did intend (factories).

[9] This is essentially any negative impact on society that doesn’t come from the other two - job loss, power-imbalance, economic disempowerment, monocultures, etc. Perhaps you could call this “human misalignment” as opposed to the above “AI misalignment” :)

[10] If you forced me to give a number, I would say 50% Societal Impacts, 25% Misuse, and 25% Misalignment.

![“How lucky I am to have something that makes saying goodbye so hard” - Winnie the Pooh / A. A. Milne](https://substackcdn.com/image/fetch/w_1456,c_limit,f_auto,q_auto:good,fl_progressive:steep/https%3A%2F%2Fsubstack-post-media.s3.amazonaws.com%2Fpublic%2Fimages%2F9836c3f3-6044-4c0c-8139-e882a7c61985_496x454.jpeg)
